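The program runs on a cloud server with Ubuntu 20.04 LTS. Testing in a browser, pages open normally, and requests for ordinary HTML text and small images of a few dozen KB work fine. Then I put up a 1.63 MB (1714387 bytes) image I found online, and the client disconnected on its own before the image finished loading. The application itself looks fine, but my design sends Connection: keep-alive in responses by default, so the client disconnecting on its own clearly doesn't match the design, and I started debugging.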

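First I ruled out SIGPIPE, because strace showed no SIGPIPE and the server kept running normally after the client disconnected. strace showed that the client itself closed the connection, which triggered an EPOLLRDHUP event in the server's EventLoop. At this point I hit a blind spot and couldn't figure it out.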

After that I fumbled around for quite a while, changing functions and adding logs; I'll skip the details.

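I also wrote a client that sends an HTTP request to test. It reported normal close after reading a bit over 80000 bytes. Hey, you haven't finished reading yet, so how did read return 0?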

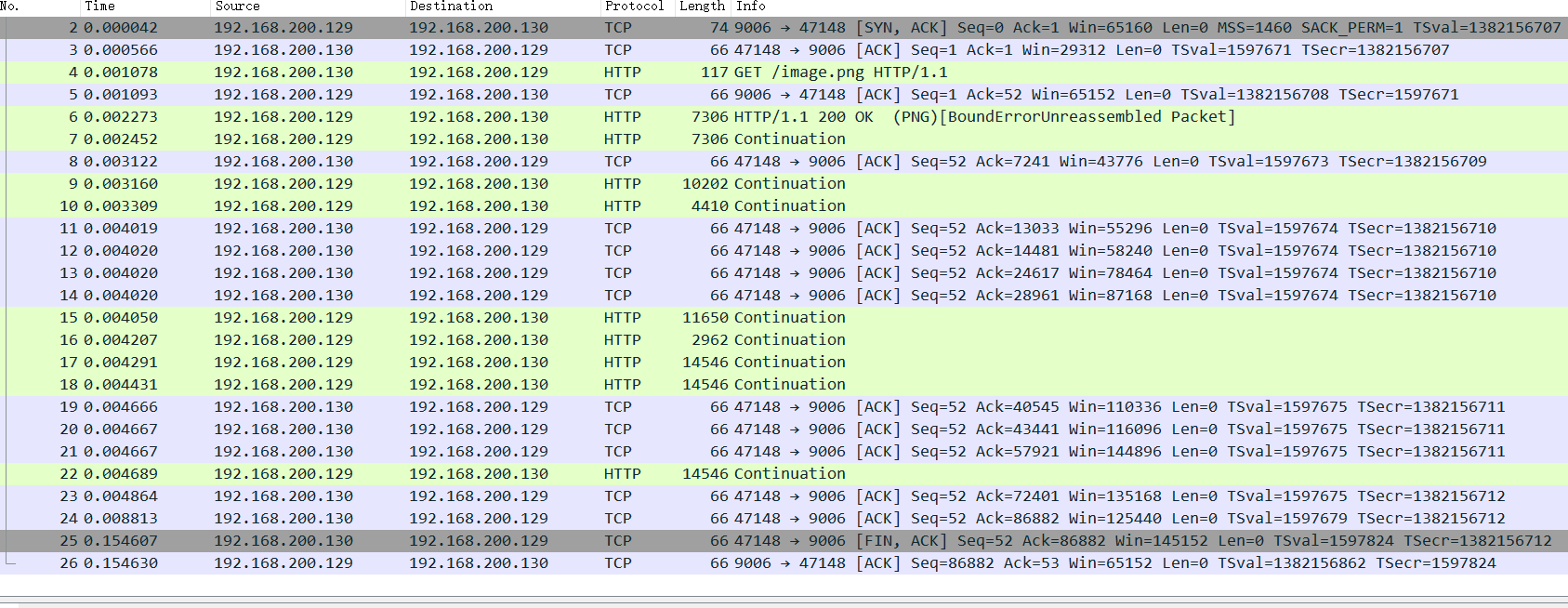

    int main(int argc, char *argv[]){
        const char *ip = argv[1];
        int port = atoi(argv[2]);
        if(argc < 3){
            printf("usage:%s ip port\n", argv[0]);
            return;
        }

        int sockfd = socket(PF_INET, SOCK_STREAM, 0);
        assert(sockfd != -1);
        struct sockaddr_in serv_addr;
        socklen_t serv_addr_len = sizeof(serv_addr);
        serv_addr.sin_family = AF_INET;
        serv_addr.sin_addr.s_addr = inet_addr(ip);
        serv_addr.sin_port = htons(port);
        assert(connect(sockfd, (sockaddr*)&serv_addr, serv_addr_len) != -1);

        char req[1024] = {0};
        const char *path = "image.png";
        snprintf(req, 45 + strlen(path),"GET /%s HTTP/1.1\r\nConnection: keep-alive\r\n\r\n",path);
        char buf[1024] = {0};
        size_t wlen = -1;
        size_t nread = 0;
        do{
            size_t wlen = write(sockfd,req, strlen(req));
            printf("write: %s\n", req);
            size_t rlen = read(sockfd, buf, sizeof(buf));
            buf[rlen] = 0;
            nread += rlen;
            printf("read: %s\n", buf);
            if(rlen > 0){
            }
            else if(rlen == 0){
                perror("normal close");
                break;
            }
            else{
                perror("read error:");
                break;
            }
        }while(wlen > 0);
        printf("total read: %d\n", nread);
        close(sockfd);
        return 0;
    }

I moved the server and the client onto two separate virtual machines to rule out SSL as a factor, then ran tcpdump on both ends and wrote the captures to files. Looking at them in Wireshark, it is still the client that sends a FIN to the server, and I just can't figure out why. I hope someone experienced can offer suggestions and break through my unknown unknowns.
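A side note on the test client above, offered as a minimal sketch rather than as the original code: `rlen` is declared as `size_t`, so a failed `read` (which returns -1) lands in the `rlen > 0` branch and the error branch can never run, and the request is re-sent on every loop iteration. Assuming a hypothetical helper named `readAll`, a read loop that keeps the count signed and stops at EOF might look like this:

```cpp
#include <cassert>
#include <cerrno>
#include <unistd.h>

// Hypothetical helper (not from the original post): drains fd until EOF.
// Returns the total number of bytes read, or -1 on a read error.
long long readAll(int fd) {
    assert(fd >= 0);
    char buf[1024];
    long long total = 0;
    for (;;) {
        // ssize_t is signed, so an error return of -1 stays detectable.
        ssize_t n = read(fd, buf, sizeof(buf));
        if (n > 0) {
            total += n;
            continue;
        }
        if (n == 0) {
            return total;  // the peer closed its end: EOF
        }
        if (errno == EINTR) {
            continue;      // interrupted by a signal, retry
        }
        return -1;         // genuine read error
    }
}
```

With a loop like this, the request would be written once before calling `readAll(sockfd)`, and the returned total can be compared against the Content-Length the server announced.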

The code involved in the whole response-writing flow is as follows:

    void WebServer::EventLoop(){
        int timeoutMS = -1;
        while(1){
            int nevent = epoll_wait(epollfd_, events_, MAX_EVENTS, timeoutMS);
            for(int i = 0; i < nevent; ++i){
                int sockfd = events_[i].data.fd;
                uint32_t events = events_[i].events;
                if(sockfd == listenfd_){
                    //add new connection
                    dealNewConn();
                }
                else if(events & (EPOLLRDHUP | EPOLLHUP | EPOLLERR)){
                    assert(connMap_[sockfd]);
                    dealCloseConn(connMap_[sockfd]);
                }
                else if(events & EPOLLIN){
                    //read request/close connection
                    assert(connMap_[sockfd]);
                    dealRead(connMap_[sockfd]);
                }
                else if(events & EPOLLOUT){
                    //send response
                    assert(connMap_[sockfd]);
                    dealWrite_debug(connMap_[sockfd]);
                }
            }
        }
    }

    void WebServer::dealWrite_debug(const HttpConnectionPtr& client){
        // normally this would be handed to the thread pool; for debugging, multithreading is taken out of the picture
        onWrite_debug(client);
    }

    void WebServer::onWrite_debug(const HttpConnectionPtr& client){
        // make sure the connection being written to still exists, to avoid a segfault;
        // take extra care when connections are managed by smart pointers!
        assert(client);
        int writeErrno = 0;
        ssize_t nwrite = client->writeOut_debug(&writeErrno);
        const string& clientAddr = client->getClientAddr().getIpPort();
        printf("write %ld byte to %s\n",nwrite,clientAddr.c_str());
        if(client->toWriteBytes() == 0){
            // transfer complete
            if(client->isKeepAlive()){
                onProcess(client);
                return;
            }
        }
        else{
            // transfer not complete; cases include the peer disconnecting mid-write
            if(writeErrno == EAGAIN || writeErrno == EWOULDBLOCK){
                epoll_modfd(epollfd_, client->getSocket(), EPOLLOUT | connEvent_);
                return;
            }
        }
        dealCloseConn(client);
    }

    void WebServer::dealCloseConn(const HttpConnectionPtr& client){
        int connfd = client->getSocket();
        perror("status");
        epoll_delfd(epollfd_, connfd);
        connMap_.erase(connfd);
    }

    ssize_t HttpConnection::writeOut_debug(int *saveErrno){
        printf("Buffer write: \n");
        cout << string(outbuffer_.peek(), outbuffer_.readableBytes()) << endl;
        ssize_t len = -1;
        ssize_t nwrite = 0;
        do{
            len = writev(sockfd_, iov_, iovCnt_);
            if(iov_[0].iov_len + iov_[1].iov_len == 0){
                break;
            }
            if(len <= 0){
                saveErrno = &errno;
            }
            else if(static_cast<size_t>(len) <= iov_[0].iov_len){
                iov_[0].iov_base = (uint8_t*) iov_[0].iov_base + len;
                iov_[0].iov_len -= len;
                outbuffer_.retrieve(len);
            }
            else{
                iov_[1].iov_base = (uint8_t*) iov_[1].iov_base + (len - iov_[0].iov_len);
                iov_[1].iov_len -= (len - iov_[0].iov_len);
                if(iov_[0].iov_len) {
                    outbuffer_.retrieveAll();
                    iov_[0].iov_len = 0;
                }
            }
            nwrite += len;
            cout << "write: " << len << " " << "write bytes left: " << toWriteBytes() << endl;
        }while(HttpConnection::isET && toWriteBytes() > 1024);
        return nwrite;
    }

    // functions that run before the write_debug response-writing step
    bool HttpConnection::process(){
        if(inbuffer_.readableBytes() <= 0) return false;
        HttpRequest req;
        if(!httpParser_.parseRequest(inbuffer_,req)) return false;
        assert(inbuffer_.readableBytes() == 0);
        inbuffer_.retrieveAll();
        string headkey = req.getHeader("Connection");
        if(headkey == string("keep-alive")){
            keepAlive_ = true;
        }
        else if(headkey == string("close")){
            close_ = true;
        }
        HttpResponse resp(close_);
        makeResponse(req,resp);
        return true;
    }

    void HttpConnection::makeResponse(HttpRequest& req,HttpResponse& resp){
        string path(srcDir_);
        string file = (req.getPath() == "/") ? "/index.html" : req.getPath();
        iovCnt_ = 1;
        cout << "path: " << path + file << endl;
        struct stat statbuf;
        if(stat(string(path + file).data(),&statbuf) >= 0 && !S_ISDIR(statbuf.st_mode)){
            iovCnt_++;
            int filefd = open(string(path + file).data(), O_RDONLY);
            fileLen_ = statbuf.st_size;
            mmFile_ = mmap(0, fileLen_, PROT_READ, MAP_PRIVATE, filefd, 0);
            assert(mmFile_ != (void*)-1);
            close(filefd);
            resp.setFile(true);
        }
        if(resp.isHaveFile()){
            resp.setLine(HttpResponse::k200Ok);
            resp.setContentType(MimeType::getFileType(file));
            resp.setContentLength(fileLen_);
        }
        else{
            resp.setLine(HttpResponse::k404NotFound);
            resp.setCloseConnection(true);
        }
        resp.appendAllToBuffer(outbuffer_);
        iov_[0].iov_base = const_cast<char*>(outbuffer_.peek());
        iov_[0].iov_len = outbuffer_.readableBytes();
        iov_[1].iov_base = mmFile_;
        iov_[1].iov_len = fileLen_;
    }

The relevant part of the HttpConnection class header:

    class HttpConnection : noncopyable{
    public:
        HttpConnection(int sockfd, const INetAddress& peerAddr, const INetAddress& hostAddr);
        ~HttpConnection() { onClose();}
        ssize_t readIn(int* saveErrno);
        bool process();
        ...
    public:
        static bool isET;
        static const char* srcDir_;
    private:
        void makeResponse(HttpRequest&,HttpResponse&);
        ...
        void *mmFile_;
        size_t fileLen_;
        struct iovec iov_[2];
        size_t iovCnt_;
        Buffer inbuffer_;
        Buffer outbuffer_;
        HttpParser httpParser_;
    };

The output looks like this:

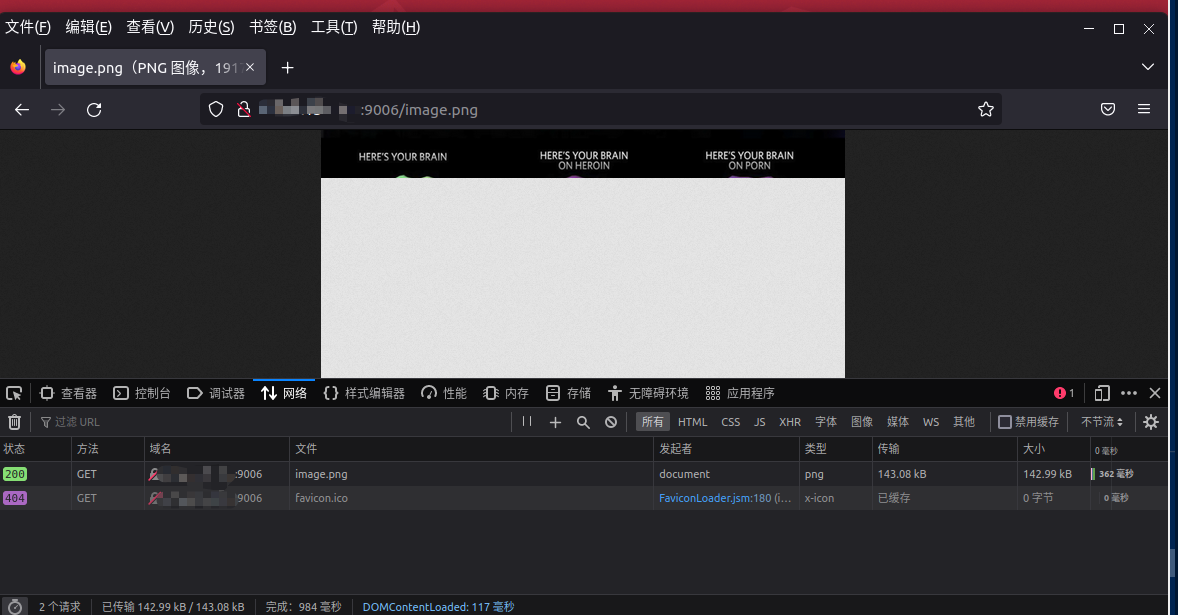

    # The function that prints "Buffer read" is not included in the code above; consider this supplementary
    Buffer read:
    GET /image.png HTTP/1.1
    Host: sss.sss.sss.sss:9006
    User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:108.0) Gecko/20100101 Firefox/108.0
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8
    Accept-Language: zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2
    Accept-Encoding: gzip, deflate
    Connection: keep-alive
    Upgrade-Insecure-Requests: 1

    # Main printed output
    path: /home/LinuxC++/Project/re_webserver/root/image.png
    Buffer write:
    HTTP/1.1 200 OK
    Connection: keep-alive
    Content-Length: 1714387
    Content-Type: image/png

    write: 143080 to write bytes left: 1571400
    write 143080 byte to ccc.ccc.ccc.ccc:35521
    status: Invalid argument
    connection ccc.ccc.ccc.ccc:35521 -> sss.sss.sss.sss:9006 closed
    connection ccc.ccc.ccc.ccc:35550 -> sss.sss.sss.sss:9006

Packet capture screenshot (192.168.200.129 is the server, 192.168.200.130 is the client):

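Note: I found that different browsers behave differently. Besides the page, Chrome also requests favicon.ico, and it seems to detect whether the resource is static and, if so, disconnect on its own; I suspect that is the effect of my Chrome extensions. Firefox doesn't do this, so the output results here are based on Firefox. Note that this is Firefox on Linux; I also tried Firefox on Windows, where for some reason the client also disconnects on its own after the resource has been requested.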

I really only know the what, not the why.

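1 ysc3839 2023-01-13 20:00:35 +08:00 via Android The second argument of snprintf is the maximum size of the buffer, not something you compute from the string length. It exists to prevent buffer overflow; here, if the length of path exceeds 1024-45, the buffer will overflow.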
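To illustrate the point in reply 1, here is a minimal sketch that passes the buffer's capacity as the second argument and uses the return value to detect truncation. The helper name `buildRequest` is hypothetical and not part of the original client:

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper (not from the original post): formats the request
// into `out`, whose capacity in bytes is `cap`.
// Returns true only if the whole request fit without truncation.
bool buildRequest(char* out, std::size_t cap, const std::string& path) {
    assert(out != nullptr && cap > 0);
    // The second argument is the capacity of `out`, not the expected length.
    int n = std::snprintf(out, cap,
                          "GET /%s HTTP/1.1\r\nConnection: keep-alive\r\n\r\n",
                          path.c_str());
    // snprintf returns the length the full string would have had;
    // a value >= cap means the output was truncated.
    return n >= 0 && static_cast<std::size_t>(n) < cap;
}
```

In the client, this would be called as `buildRequest(req, sizeof(req), path)`, so an over-long path is truncated and reported instead of writing past the end of `req`.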

2 Monad 2023-01-13 23:56:31 +08:00 via iPhone OP, please share what the cause was

3 lambdaq 2023-01-14 00:02:10 +08:00 Could you post the output of curl -v? Blind guess: it's an MTU problem. lol

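4 tracker647 OP @Monad In ssize_t HttpConnection::writeOut_debug there is a line that is supposed to save the error code once the write is done. I accidentally wrote if(len <= 0){ saveErrno = &errno; } when it should have been *saveErrno = errno;. That line made the error-code check in onWrite_debug ineffective, so execution fell through to the dealCloseConn call below it. All I can say is: when copying an implementation, either copy-paste all of it, write all of it from memory, or come up with your own design. Sigh.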
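The difference described in reply 4 can be reproduced in isolation. The sketch below uses hypothetical helper names (`saveBuggy`, `saveFixed`, `shouldRetry`) and only mirrors the shape of the original check; it is not the server's code:

```cpp
#include <cassert>
#include <cerrno>

// Buggy variant: reassigns the local copy of the pointer.
// The caller's variable is never touched.
void saveBuggy(int* saveErrno) {
    saveErrno = &errno;
    (void)saveErrno;  // silence "set but not used" warnings
}

// Fixed variant: writes the current errno value through the pointer.
void saveFixed(int* saveErrno) {
    assert(saveErrno != nullptr);
    *saveErrno = errno;
}

// Mirrors the retry condition checked in onWrite_debug.
bool shouldRetry(int writeErrno) {
    return writeErrno == EAGAIN || writeErrno == EWOULDBLOCK;
}
```

With the buggy variant, the caller's `writeErrno` keeps its initial value of 0, so `shouldRetry` is false even when `writev` failed with EAGAIN. The server then closes the connection while most of the file is still unsent, which would explain the client seeing EOF after only part of the body.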

5 Monad 2023-01-14 14:25:12 +08:00 via Android @tracker647 Got it, thanks for sharing

6 documentzhangx66 2023-01-14 21:03:20 +08:00 I think this is a problem with the development approach. Why hand-write this in this day and age? Wouldn't protocol buffer + grpc be nicer?