Related material: roulette wheel and ball speed detection with an OpenCV binary mask https://www.linkedin.com/pulse/roulette-wheel-ball-speed-detection-opencv-binary-mask-muhammad-anas-nqy4f

https://www.youtube.com/watch?v=bpy933SQ6Q0 https://www.youtube.com/watch?v=kibZDD_I9HY https://www.youtube.com/watch?v=HaMlKvNqCVs https://www.spinsight.ai/


    void calibrateHandEye(
        InputArrayOfArrays R_gripper2base,  // rotation matrices converted from the gimbal's pitch/yaw/roll angles
        InputArrayOfArrays t_gripper2base,  // all zeros, because nothing translates (and I want the gimbal platform to be the world origin)
        InputArrayOfArrays R_target2cam,    // rvec output by calibrateCamera
        InputArrayOfArrays t_target2cam,    // tvec output by calibrateCamera
        OutputArray R_cam2gripper,
        OutputArray t_cam2gripper,
        HandEyeCalibrationMethod method = CALIB_HAND_EYE_TSAI)

Here is what I currently do:

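- Rotate the gimbal to different pitch/yaw/roll angles, take a photo of the chessboard at each pose, and record the pitch/yaw/roll angles at that moment;

- Use calibrateCamera to get the tvec and rvec of the chessboard in every image;

- Convert the recorded gimbal pitch/yaw/roll angles into rotation matrices;

- Call calibrateHandEye.

However, the result is wildly different from reality. So I came here to ask whether anyone has experience with this and can spot what might be wrong in my steps. Thanks in advance, everyone!

Personally I suspect the conversion in the third step is the most likely source of error. This is how I wrote it: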

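    #include <cmath>
    #include <Eigen/Geometry>

    // Euler angles (radians) around z, y and x to a quaternion.
    Eigen::Quaternionf euler2quaternionf(const float z, const float y, const float x)
    {
        const float cos_z = std::cos(z * 0.5f), sin_z = std::sin(z * 0.5f),
                    cos_y = std::cos(y * 0.5f), sin_y = std::sin(y * 0.5f),
                    cos_x = std::cos(x * 0.5f), sin_x = std::sin(x * 0.5f);

        // Eigen's constructor takes the coefficients in (w, x, y, z) order.
        Eigen::Quaternionf quaternion(
            cos_z * cos_y * cos_x + sin_z * sin_y * sin_x,
            cos_z * cos_y * sin_x - sin_z * sin_y * cos_x,
            sin_z * cos_y * sin_x + cos_z * sin_y * cos_x,
            sin_z * cos_y * cos_x - cos_z * sin_y * sin_x
        );
        return quaternion;
    }

I first convert to a quaternion, and then obtain the rotation matrix from it (using Eigen's built-in method).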
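One way to rule out the third step is to check the quaternion formula against a direct composition of axis rotations. The following is a minimal Python sketch (standard library only) that reproduces the formula from euler2quaternionf and compares the resulting matrix with Rz(z)·Ry(y)·Rx(x). All helper names are made up for illustration. Whether the gimbal really reports its angles in this axis order, with these signs, and in radians is an assumption that has to be checked against the gimbal's documentation.

```python
import math


def euler_zyx_to_quaternion(z, y, x):
    """Same formula as the C++ snippet above; returns (w, x, y, z)."""
    cz, sz = math.cos(z / 2), math.sin(z / 2)
    cy, sy = math.cos(y / 2), math.sin(y / 2)
    cx, sx = math.cos(x / 2), math.sin(x / 2)
    return (
        cz * cy * cx + sz * sy * sx,
        cz * cy * sx - sz * sy * cx,
        sz * cy * sx + cz * sy * cx,
        sz * cy * cx - cz * sy * sx,
    )


def quaternion_to_matrix(q):
    """Unit quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def euler_zyx_to_matrix(z, y, x):
    """Reference composition: rotate about x first, then y, then z."""
    return matmul(rot_z(z), matmul(rot_y(y), rot_x(x)))


def max_abs_diff(a, b):
    """Largest element-wise difference between two 3x3 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))
```

If max_abs_diff stays at floating-point noise level for a range of test angles, the formula itself matches the Z-Y-X composition, and the mismatch is more likely to come from the angle convention, the units, or the other inputs to calibrateHandEye.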

The test code is as follows:

    #include <opencv2/opencv.hpp>
    #include <iostream>

    int main(int argc, char** argv)
    {
        cv::Mat image = cv::imread("image.jpg");
        if (image.empty()) {
            std::cout << "Error loading image!" << std::endl;
            return -1;
        }
        // cv::imshow("Image", image);
        std::cout << "size: " << image.cols << "x" << image.rows << std::endl;
        return 0;
    }

    c++ -o cv-test cv-test.cc -I/usr/local/opencv/include/opencv4/ -L/usr/local/opencv/lib64/ -lopencv_core -lopencv_imgcodecs

This compiles fine.

Then I added -static to try a static build (OpenCV was built with static libraries, e.g. /usr/local/opencv/lib64/libopencv_core.a):

    c++ -o cv-test cv-test.cc -I/usr/local/opencv/include/opencv4/ -L/usr/local/opencv/lib64/ -lopencv_core -lopencv_imgcodecs -static

It fails with:

    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(opencl_core.cpp.o): in function `opencl_check_fn(int)':
    /home/nick/github/opencv/modules/core/src/opencl/runtime/opencl_core.cpp:166: warning: Using 'dlopen' in statically linked applications requires at runtime the shared libraries from the glibc version used for linking
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `ipp::IwiImage::Release()':
    /home/nick/github/opencv/build/3rdparty/ippicv/ippicv_lnx/iw/include/iw++/iw_image.hpp:945: undefined reference to `iwAtomic_AddInt'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `ipp::IwiImage::~IwiImage()':
    /home/nick/github/opencv/build/3rdparty/ippicv/ippicv_lnx/iw/include/iw++/iw_image.hpp:813: undefined reference to `iwAtomic_AddInt'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `ipp::IwiImage::Release()':
    /home/nick/github/opencv/build/3rdparty/ippicv/ippicv_lnx/iw/include/iw++/iw_image.hpp:957: undefined reference to `iwiImage_Release'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `ipp::IwException::IwException(int)':
    /home/nick/github/opencv/build/3rdparty/ippicv/ippicv_lnx/iw/include/iw++/iw_core.hpp:133: undefined reference to `iwGetStatusString'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `cv::transpose(cv::_InputArray const&, cv::_OutputArray const&)':
    /home/nick/github/opencv/modules/core/src/matrix_transform.cpp:228: undefined reference to `ippicviTranspose_32f_C4R'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_core.a(matrix_transform.cpp.o): in function `ipp_transpose':
    /home/nick/github/opencv/modules/core/src/matrix_transform.cpp:228: undefined reference to `ippicviTranspose_32s_C3R'
    /usr/bin/ld: /home/nick/github/opencv/modules/core/src/matrix_transform.cpp:228: undefined reference to `ippicviTranspose_16s_C3R'
    ...
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_imgcodecs.a(grfmt_webp.cpp.o): in function `std::__shared_count<(__gnu_cxx::_Lock_policy)2>::__shared_count<unsigned char*, void (*)(void*), std::allocator<void>, void>(unsigned char*, void (*)(void*), std::allocator<void>)':
    /usr/include/c++/13/bits/shared_ptr_base.h:958: undefined reference to `WebPFree'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_imgcodecs.a(grfmt_webp.cpp.o): in function `cv::WebPEncoder::write(cv::Mat const&, std::vector<int, std::allocator<int> > const&)':
    /home/nick/github/opencv/modules/imgcodecs/src/grfmt_webp.cpp:286: undefined reference to `WebPEncodeLosslessBGRA'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_imgcodecs.a(grfmt_webp.cpp.o): in function `std::_Sp_ebo_helper<0, void (*)(void*), false>::_Sp_ebo_helper(void (*&&)(void*))':
    /usr/include/c++/13/bits/shared_ptr_base.h:482: undefined reference to `WebPFree'
    /usr/bin/ld: /usr/local/opencv/lib64//libopencv_imgcodecs.a(grfmt_webp.cpp.o): in function `cv::WebPEncoder::write(cv::Mat const&, std::vector<int, std::allocator<int> > const&)':
    /home/nick/github/opencv/modules/imgcodecs/src/grfmt_webp.cpp:271: undefined reference to `cv::cvtColor(cv::_InputArray const&, cv::_OutputArray const&, int, int)'
    /usr/bin/ld: /home/nick/github/opencv/modules/imgcodecs/src/grfmt_webp.cpp:293: undefined reference to `WebPEncodeBGR'
    /usr/bin/ld: /home/nick/github/opencv/modules/imgcodecs/src/grfmt_webp.cpp:297: undefined reference to `WebPEncodeBGRA'
    /usr/bin/ld: /home/nick/github/opencv/modules/imgcodecs/src/grfmt_webp.cpp:282: undefined reference to `WebPEncodeLosslessBGR'
    /usr/bin/ld: cv-test: hidden symbol `opj_stream_destroy' isn't defined
    /usr/bin/ld: final link failed: bad value
    collect2: error: ld returned 1 exit status

Does anyone know of a way to get a static build working?
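The undefined symbols in the log (iw*, ippicvi*, WebP*, opj_*, cv::cvtColor) all live in libraries that are not on the link line. With static archives the linker resolves symbols from left to right, so every library must appear before the libraries it depends on. The sketch below, in Python with only the standard library, derives such an order from a dependency map. The map is an assumption pieced together from the symbols in the error log, and the library names (libwebp, libopenjp2, ippiw, ippicv) are guesses at how a typical OpenCV build names its bundled third-party archives; the real list for this build is likely longer and may differ.

```python
from graphlib import TopologicalSorter  # available from Python 3.9

# Hypothetical, partial dependency map: library -> libraries it needs.
# Derived only from the undefined symbols in the log above.
DEPENDS_ON = {
    "opencv_imgcodecs": ["opencv_imgproc", "opencv_core", "libwebp", "libopenjp2"],
    "opencv_imgproc": ["opencv_core"],
    "opencv_core": ["ippiw", "ippicv"],
    "ippiw": ["ippicv"],
}


def static_link_order(depends_on):
    """Return library names so that each one precedes its dependencies."""
    # static_order() lists dependencies first; a static link line needs
    # the opposite, so the result is reversed.
    dependencies_first = list(TopologicalSorter(depends_on).static_order())
    return list(reversed(dependencies_first))


def link_flags(depends_on):
    """Format the ordered libraries as -l flags for the compiler driver."""
    return " ".join(f"-l{name}" for name in static_link_order(depends_on))
```

The resulting flags would replace `-lopencv_core -lopencv_imgcodecs` in the command, together with a `-L` entry for the directory that holds the third-party archives. If OpenCV was configured with OPENCV_GENERATE_PKGCONFIG=ON, `pkg-config --static --libs opencv4` may produce the complete list directly. The dlopen warning is separate: glibc still needs its shared libraries at run time for that call even in a static binary.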

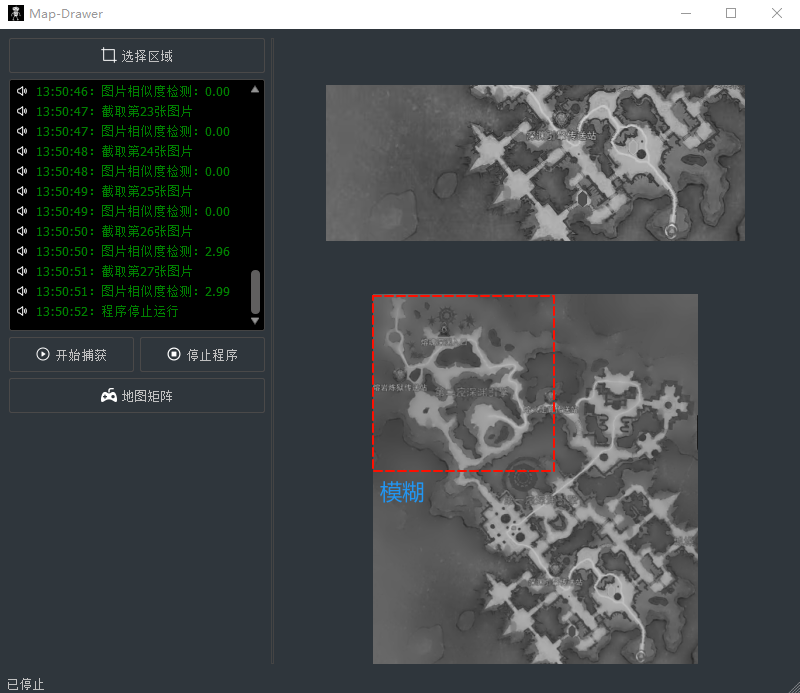

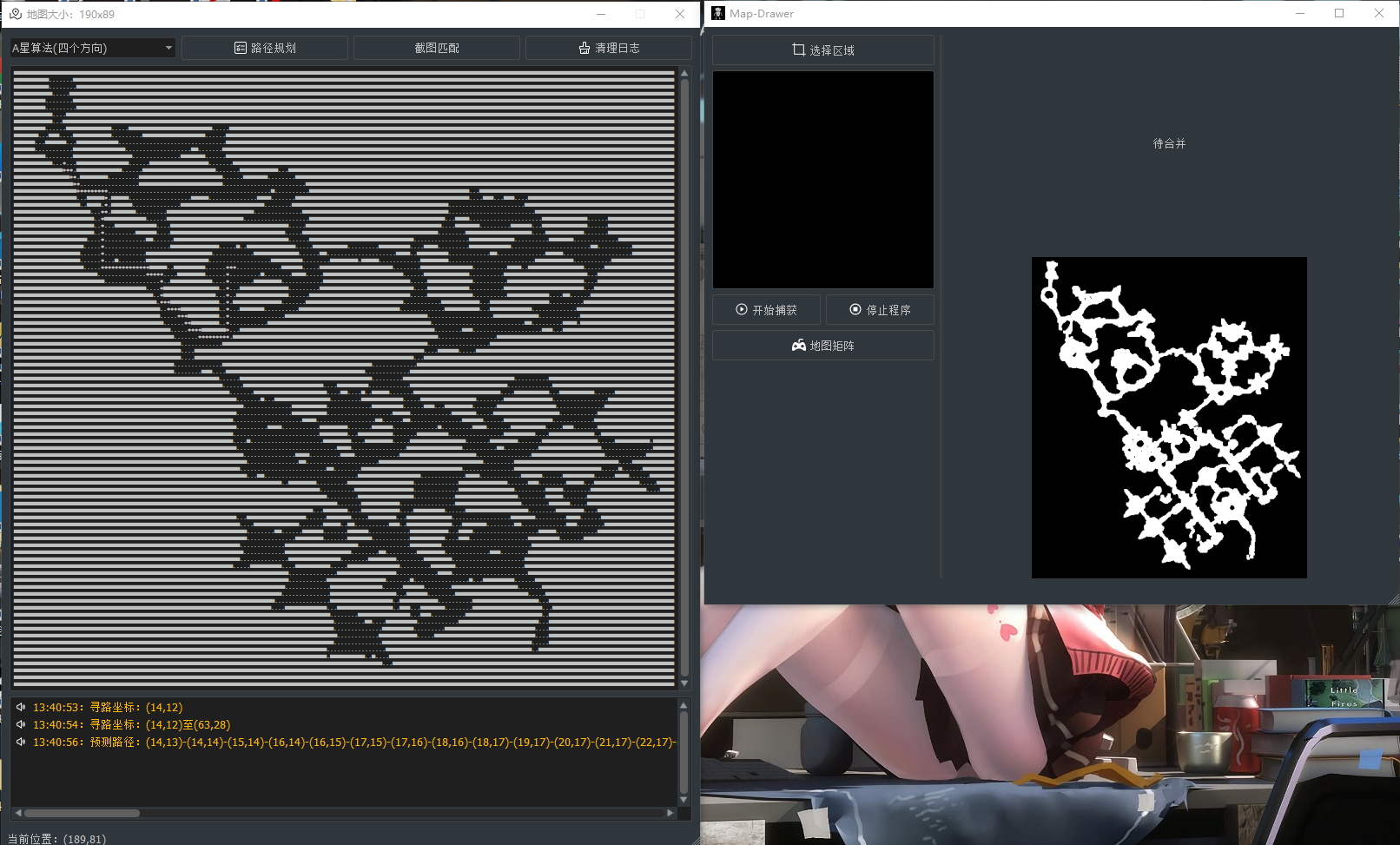

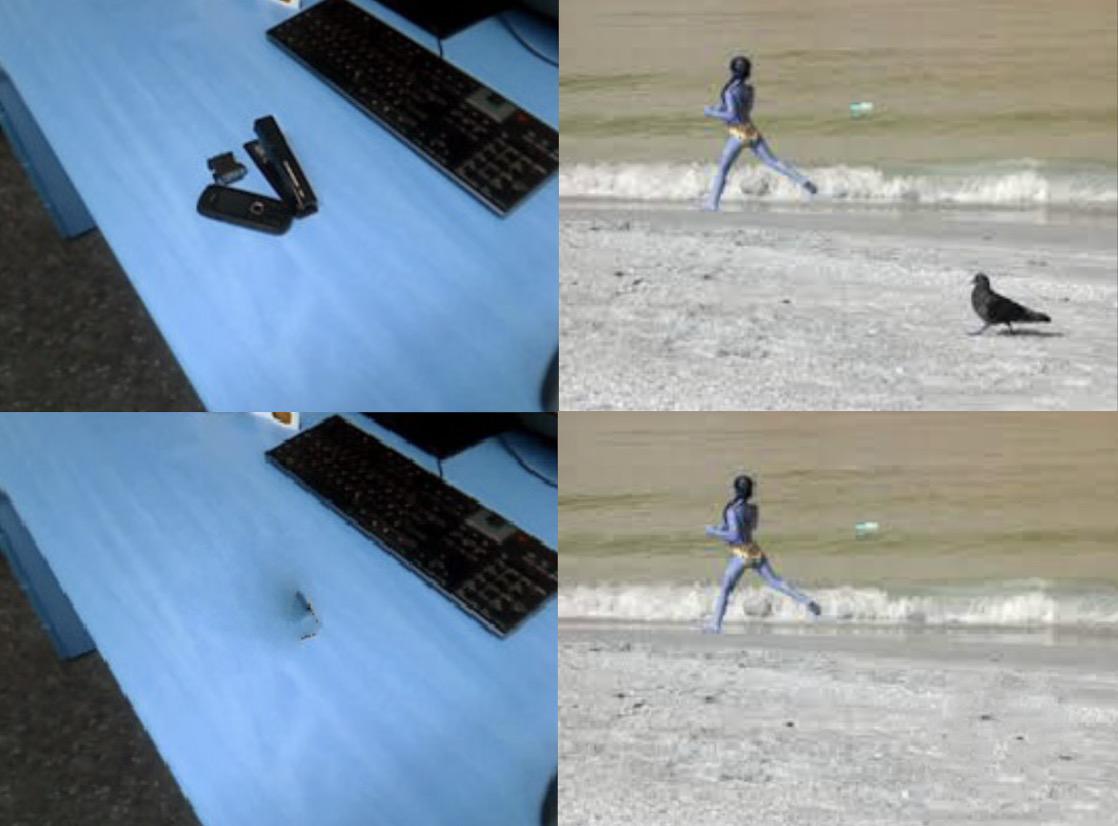

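My current idea is to box-select the text region as a template, then sample one frame every few seconds and run template matching on it. If it matches, I replace the frame that contains the text with a neighbouring frame in which the text has not appeared. But if the scene with the moving text also contains other moving objects, this approach no longer works. Are there any better ideas? Thanks!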
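The matching step of this idea can be sketched in plain Python as a sum-of-squared-differences search over a grayscale frame given as a list of rows. This only illustrates the principle; in practice a library routine such as OpenCV's cv2.matchTemplate would do the search, and the threshold max_score is a hypothetical tuning parameter.

```python
def match_template_ssd(frame, template):
    """Slide the template over the frame and return (row, col, score)
    for the position with the lowest sum of squared differences."""
    frame_h, frame_w = len(frame), len(frame[0])
    tmpl_h, tmpl_w = len(template), len(template[0])
    best = None
    for r in range(frame_h - tmpl_h + 1):
        for c in range(frame_w - tmpl_w + 1):
            score = sum(
                (frame[r + i][c + j] - template[i][j]) ** 2
                for i in range(tmpl_h)
                for j in range(tmpl_w)
            )
            if best is None or score < best[2]:
                best = (r, c, score)
    return best


def contains_text(frame, template, max_score):
    """Treat the frame as containing the text if the best match is close enough."""
    return match_template_ssd(frame, template)[2] <= max_score
```

Because the text moves, the search has to cover the whole frame (or at least the band the text travels through) rather than a fixed region.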

Illustration 1

Illustration 2

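    # Check that the camera opened successfully
    if not cap.isOpened():
        raise Exception("Failed to open camera")
    # The image comes out black unless we sleep briefly first!
    sleep(0.1)
    # Read one frame
    ret, frame = cap.read()
    # Check that the frame was read successfully
    if not ret:
        raise Exception("Failed to capture frame")
    # Release the camera
    cap.release()
    # Save the image to the current directory
    cv2.imwrite("captured_photo.jpg", frame)
    return "Photo captured successfully"

I tried the Hough transform, but it seems to take too long, and its accuracy can't be guaranteed either.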

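I'm looking for an approach (I'm not very familiar with OpenCV; it seems to include SVM, CNN, DNN and so on, but I don't know whether any of those are suitable). Many thanks!

Thanks, everyone.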

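    cv::VideoCapture video;
    video.open("E:/20230612162536.MOV");
    cv::Mat mat_1, mat_64F;
    video.read(mat_1);
    mat_1.convertTo(mat_64F, CV_64F);
    cv::imshow("first frame", mat_1);            // displays the image correctly
    cv::imshow("first frame, 64-bit", mat_64F);  // displays a pure white image, same size as mat_1

I need to make sure these frames are 64-bit floating point, so I used convertTo(), but after the conversion the image shows up as pure white.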

My question: how do I convert a frame from a MOV-format video so that the frame's data type is guaranteed to be 64-bit floating point?
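A likely explanation, offered as an assumption rather than a diagnosis: the conversion itself is fine, and the white window is a display effect. cv::imshow multiplies floating-point pixels by 255 before showing them, so it expects values in the range 0 to 1, whereas convertTo() without a scale factor keeps the original 0 to 255 values. The small Python model below mimics that display mapping with two made-up helper functions; it does not call OpenCV.

```python
def imshow_float_to_display(value):
    """Model of the display mapping for float images: multiply by 255,
    then clamp to the 8-bit range 0..255."""
    return max(0, min(255, round(value * 255)))


def scale_to_unit_range(pixel_8bit):
    """Rescale an 8-bit pixel value to the 0..1 range that float display expects."""
    return pixel_8bit / 255.0
```

In the C++ code this corresponds to passing a scale factor, `mat_1.convertTo(mat_64F, CV_64F, 1.0 / 255)`, if the frame should also display correctly. If only the data type matters for later computation, the unscaled CV_64F matrix already holds the right values.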


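What I expect: take the time dimension plus two other dimensions and convert them into an MP4 video.

My approach: first convert the NIfTI image into 2D arrays, then concatenate them in order into a video.

In practice, however, the converted video is distorted. The Windows 11 built-in player shows a distorted picture, and QQ Player reports that the video is invalid.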
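One likely cause, stated as an assumption: the script in this post casts every slice to float32 and passes it straight to cv2.VideoWriter.write, while the writer generally expects 8-bit frames, and the raw NIfTI intensities are not limited to 0..255 either. Rescaling each slice to integers in 0..255 before writing may fix the distortion. The sketch below shows the rescaling in plain Python on a slice given as a list of rows; the function name is made up for illustration.

```python
def to_uint8_frame(slice_2d):
    """Min-max scale a 2D list of numbers to integers in 0..255."""
    values = [v for row in slice_2d for v in row]
    low, high = min(values), max(values)
    span = high - low
    if span == 0:
        # A constant slice carries no contrast; map it to black.
        return [[0 for _ in row] for row in slice_2d]
    return [[round((v - low) * 255 / span) for v in row] for row in slice_2d]
```

With NumPy arrays the same step could be written as `cv2.normalize(img, None, 0, 255, cv2.NORM_MINMAX).astype(np.uint8)` before the cvtColor call. Scaling per slice changes the brightness from frame to frame, so using the minimum and maximum of the whole volume may give a steadier video.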

Preview link for the source file and the converted file:

https://1drv.ms/u/s!Ah4q2HtKB2AWg4NnYXaMslribNVlWw?e=D8ScIs

Code:

    import os
    import cv2
    import numpy as np
    import nibabel as nib


    def nii2imgs_dim(nii, dim) -> list:
        """
        Convert NIfTI data into a list of images without saving intermediate
        files, so that they can be turned into a video directly.
        :param nii: the NIfTI data
        :param dim: the dimension to convert
        :return: list of images
        """
        imgs = []
        data = nii
        for d3i in range(data.shape[3]):
            # Read one 3D volume
            d3img = data[:, :, :, d3i]
            # Get the shape of the volume
            d3img_shape = d3img.shape
            # Iterate over every slice
            for d2j in range(d3img_shape[2]):
                # Get slice d2j of volume d3i
                img = data[:, :, d2j, d3i]
                imgs.append(img)
        return imgs


    def imgs2mp4(img_list, save_path):
        """
        Convert a list of images into an MP4 file.
        :param img_list: list of images
        :param save_path: output directory
        :return: None
        """
        # Create save_path if it does not exist
        if not os.path.exists(save_path):
            os.makedirs(save_path)
        # Convert the image list to MP4.
        # img is the first element and *imgs is the rest; the first one is used to get the frame size.
        img, *imgs = img_list
        print(img.shape)
        # Convert img to float32
        img = img.astype(np.float32)
        img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)
        height, width, layers = img.shape
        video = cv2.VideoWriter(save_path + "video.mp4", cv2.VideoWriter_fourcc(*'mp4v'), 1, (width, height))
        video.write(img)
        for img in imgs:
            img = img.astype(np.float32)
            img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)
            video.write(img)
        video.release()


    img = nib.load(file_path)
    data = img.get_fdata()
    # Convert the NIfTI data into a list of images
    imgs = nii2imgs_dim(data, 3)
    # Convert the image list to MP4
    imgs2mp4(imgs, "./tmp/")

It is mainly meant for checking in and redeeming gym memberships.

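But implementing it has turned up too many problems, and many of them I can't solve. Would anyone like to work through it together?

v5324653

Also, admin, have I been blocked? I can't post new topics anymore.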